Handling fix results for streaming

Nick Chen

August 28, 2024

In Pt. 1, we described the new streaming architecture we built out to reduce the latency and cost of validation. The new architecture brings lots of benefits, but also complicates some existing use cases, like fix actions. Here we’ll describe how we deal with these cases.

Fix Actions

Guardrails lets you specify an action to take when a particular validator fails. Some validators allow you to specify “FIX” as the on_fail action, which means the validator will programmatically fix the faulty LLM output.

For example, our Detect PII validator would do a fix as follows:

LLM Output: John lives in San Francisco.

Fix Output: <PERSON> lives in <LOCATION>

Our lowercase validator does a fix as follows:

LLM Output: JOHN lives IN san FRANCISCO.

Fix Output: john lives in san francisco.

In a non-streaming scenario, validators run one after another, so we can pipe the fix result of one validator into the next.

// running detect PII

LLM Output: JOHN lives IN san FRANCISCO.

Fix Output: <PERSON> lives IN <LOCATION>

// now running lowercase

LLM Output: <PERSON> lives IN <LOCATION>

Fix Output: <person> lives in <location>
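The sequential case can be sketched in a few lines of Python. This is only an illustration, not the Guardrails implementation: `toy_pii_fix`, `lowercase_fix`, `run_sequentially`, and the `TOY_ENTITIES` lookup table are hypothetical stand-ins for real validators.

```python
# Hypothetical stand-in for PII detection: a fixed lookup table,
# not real entity recognition.
TOY_ENTITIES = {"JOHN": "<PERSON>", "san FRANCISCO": "<LOCATION>"}


def toy_pii_fix(text: str) -> str:
    """Replace each known entity with its placeholder label."""
    for surface, label in TOY_ENTITIES.items():
        text = text.replace(surface, label)
    return text


def lowercase_fix(text: str) -> str:
    """Lowercase the whole string."""
    return text.lower()


def run_sequentially(text: str, fixers) -> str:
    """Feed each fixer the output of the previous one."""
    for fix in fixers:
        text = fix(text)
    return text


print(run_sequentially("JOHN lives IN san FRANCISCO.", [toy_pii_fix, lowercase_fix]))
# <person> lives in <location>.
```

Because each fixer sees the previous fixer's output, ordering matters, and no merging step is needed.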

Fix actions are a fantastic way to provide responses to a user even when an LLM has violated a policy. In many cases, a fixed response is better than discarding the entire response outright. However, fix actions pose a few challenges for streaming.

Streaming and fix actions

As mentioned in Pt. 1, we recently changed our streaming implementation to allow validators to specify how much context they should accumulate before running validation.

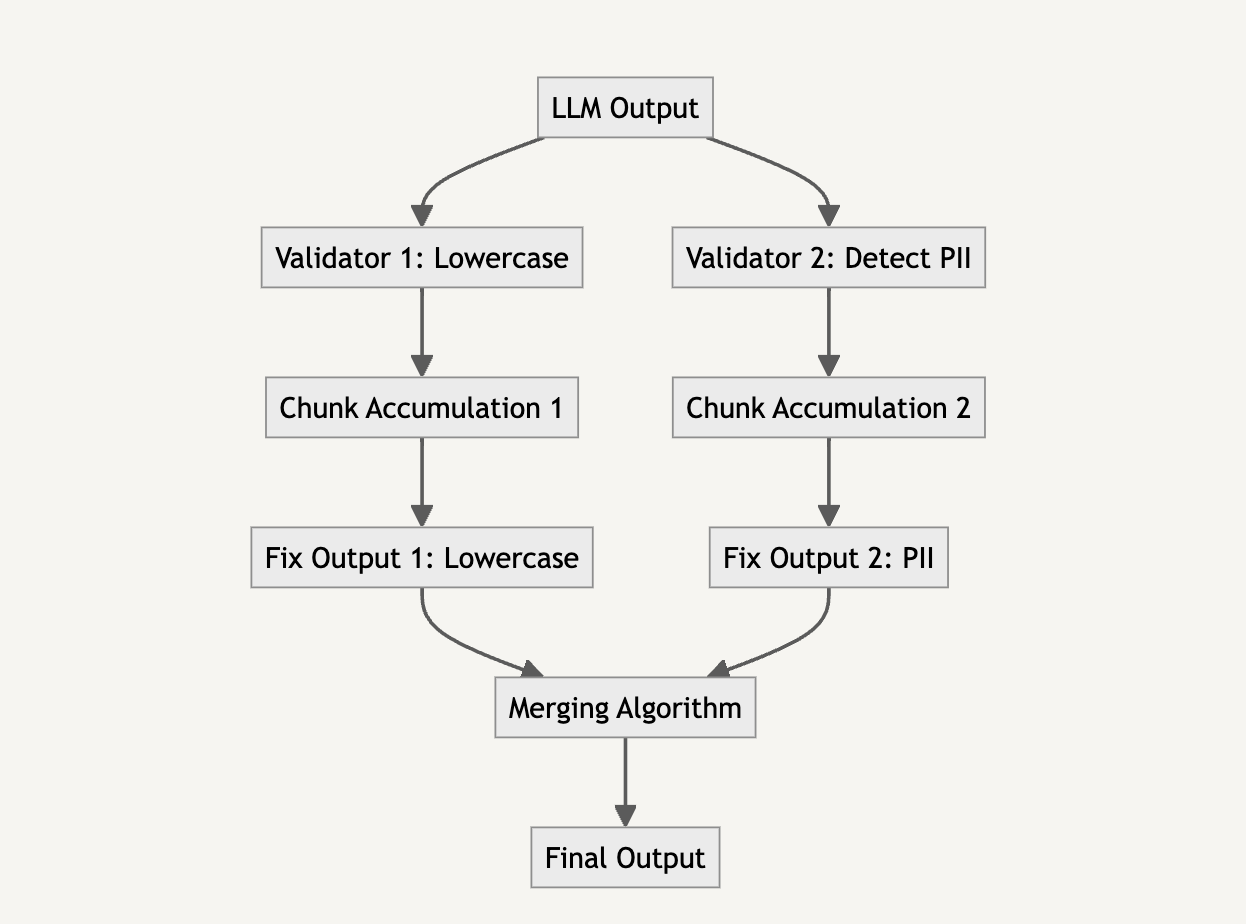

However, since each validator has a different chunk threshold for running validation, we cannot run validators in sequence. Each validator is also accumulating chunks independently, and does not know what fixes other validators have applied.
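To make the problem concrete, here is a rough simulation of two validators buffering the same stream with different thresholds. The `AccumulatingValidator` class, its `is_ready` predicate, and the two toy fixers are assumptions made for illustration; they are not Guardrails classes.

```python
class AccumulatingValidator:
    """Hypothetical validator that buffers chunks until its own threshold is met."""

    def __init__(self, name, is_ready, fix):
        self.name = name
        self.is_ready = is_ready  # predicate on the buffered text
        self.fix = fix            # fix function applied once ready
        self.buffer = ""

    def feed(self, chunk):
        """Buffer a chunk; return a fix for the buffer once ready, else None."""
        self.buffer += chunk
        if not self.is_ready(self.buffer):
            return None
        text, self.buffer = self.buffer, ""
        return self.fix(text)


def simulate(chunks, validators):
    """Return (chunk_index, validator_name, fix_output) for every fix emitted."""
    events = []
    for index, chunk in enumerate(chunks):
        for validator in validators:
            fixed = validator.feed(chunk)
            if fixed is not None:
                events.append((index, validator.name, fixed))
    return events


validators = [
    # Validates every chunk as soon as it arrives.
    AccumulatingValidator("lowercase", lambda buf: True, str.lower),
    # Waits for a full sentence before validating.
    AccumulatingValidator(
        "toy_pii",
        lambda buf: buf.rstrip().endswith("."),
        lambda text: text.replace("JOHN", "<PERSON>"),
    ),
]

for event in simulate(["JOHN ", "lives IN ", "san FRANCISCO."], validators):
    print(event)
# (0, 'lowercase', 'john ')
# (1, 'lowercase', 'lives in ')
# (2, 'lowercase', 'san francisco.')
# (2, 'toy_pii', '<PERSON> lives IN san FRANCISCO.')
```

The lowercase validator has already emitted three fixes by the time the PII validator emits its first, and neither fix reflects the other's changes.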

The fix

Our solution is to wait until all validators have accumulated enough chunks to validate and output a fix value, then to run a merging algorithm on their fixes.

For example, say we have a lowercase validator and a detect PII validator with the following fix values:

LLM Output: JOE is FUNNY and LIVES in NEW york

PII Fix Output: <PERSON> is FUNNY and LIVES in <LOCATION>

Lowercase Fix Output: joe is funny and lives in new york

Our merge algorithm would merge the two into the following:

<PERSON> is funny and lives in <LOCATION>

Under the hood, our merging algorithm is a modified version of the three-merge package, which uses Google’s diff-match-patch algorithm.
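The modified three-merge code is not reproduced here. As a rough illustration of the idea, the sketch below swaps in Python's standard `difflib` and works on whitespace-separated tokens: it diffs each fix against the original output, then replays every edit onto the original. The function names and the conflict rule (the edit that starts first wins; ties go to the validator listed first) are assumptions of this sketch, not the behavior of the real algorithm.

```python
from difflib import SequenceMatcher


def edits(base, fixed):
    """Return (start, end, replacement) for each span of base that fixed changes."""
    matcher = SequenceMatcher(a=base, b=fixed, autojunk=False)
    return [
        (i1, i2, fixed[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


def merge_fixes(original, fixes):
    """Merge several fix outputs that were all derived from the same original."""
    base = original.split()  # token-level sketch; original spacing is not preserved
    all_edits = []
    for priority, fixed in enumerate(fixes):
        for start, end, replacement in edits(base, fixed.split()):
            all_edits.append((start, priority, end, replacement))
    # Earliest edit first; ties go to the validator listed first.
    all_edits.sort(key=lambda e: (e[0], e[1]))

    merged, cursor = [], 0
    for start, _priority, end, replacement in all_edits:
        if start < cursor:
            continue  # overlaps an edit that was already applied
        merged.extend(base[cursor:start])
        merged.extend(replacement)
        cursor = end
    merged.extend(base[cursor:])
    return " ".join(merged)


print(
    merge_fixes(
        "JOE is FUNNY and LIVES in NEW york",
        [
            "<PERSON> is FUNNY and LIVES in <LOCATION>",  # PII fix, listed first
            "joe is funny and lives in new york",         # lowercase fix
        ],
    )
)
# <PERSON> is funny and lives in <LOCATION>
```

Listing the PII fix first means its placeholders win wherever both validators changed the same token, while the lowercase fix still applies to the tokens only it touched.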

Caveats

Not many validators specify fix cases, and those that do usually work quite well with our merging algorithm. However, it isn’t perfect. The merging algorithm can mangle output in some edge cases, mostly when the replacement ranges of multiple validators overlap. If you run into any bugs with stream fixes, please file an issue on the Guardrails repo and mention @nichwch.
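One way to reason about these edge cases is to look at where two validators' replacement ranges collide. The helper below is a hypothetical diagnostic, not part of Guardrails; it assumes each validator's edits are given as half-open `(start, end)` spans over the tokens of the original output.

```python
from itertools import product


def spans_overlap(a, b):
    """True if half-open spans (start, end) share at least one position."""
    return a[0] < b[1] and b[0] < a[1]


def find_conflicts(spans_a, spans_b):
    """Return every pair of spans, one from each validator, that overlap."""
    return [(a, b) for a, b in product(spans_a, spans_b) if spans_overlap(a, b)]


# Token spans changed in "JOE is FUNNY and LIVES in NEW york":
pii_spans = [(0, 1), (6, 8)]                        # JOE, NEW york
lowercase_spans = [(0, 1), (2, 3), (4, 5), (6, 7)]  # JOE, FUNNY, LIVES, NEW

print(find_conflicts(pii_spans, lowercase_spans))
# [((0, 1), (0, 1)), ((6, 8), (6, 7))]
```

An overlap does not always mean mangled output. It marks the places where a merge has to choose between two competing replacements, which is where mistakes are most likely.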

This diagram illustrates the process where the LLM output is processed by two validators—one for lowercase adjustments and one for PII detection. Each validator accumulates chunks independently and generates a fix output. The merging algorithm then combines these fixes into a final output.
