Announcing Guardrails AI 0.2.0

Shreya Rajpal

September 7, 2023

🎉 Exciting news! The team has been hard at work and is excited to announce that the latest release of Guardrails, v0.2.0, is now live!

The latest release adds support for multiple ways of initializing a Guard (including Pydantic!), a new ProvenanceV1 guardrail, and several usability improvements.

Recap — what is Guardrails AI?

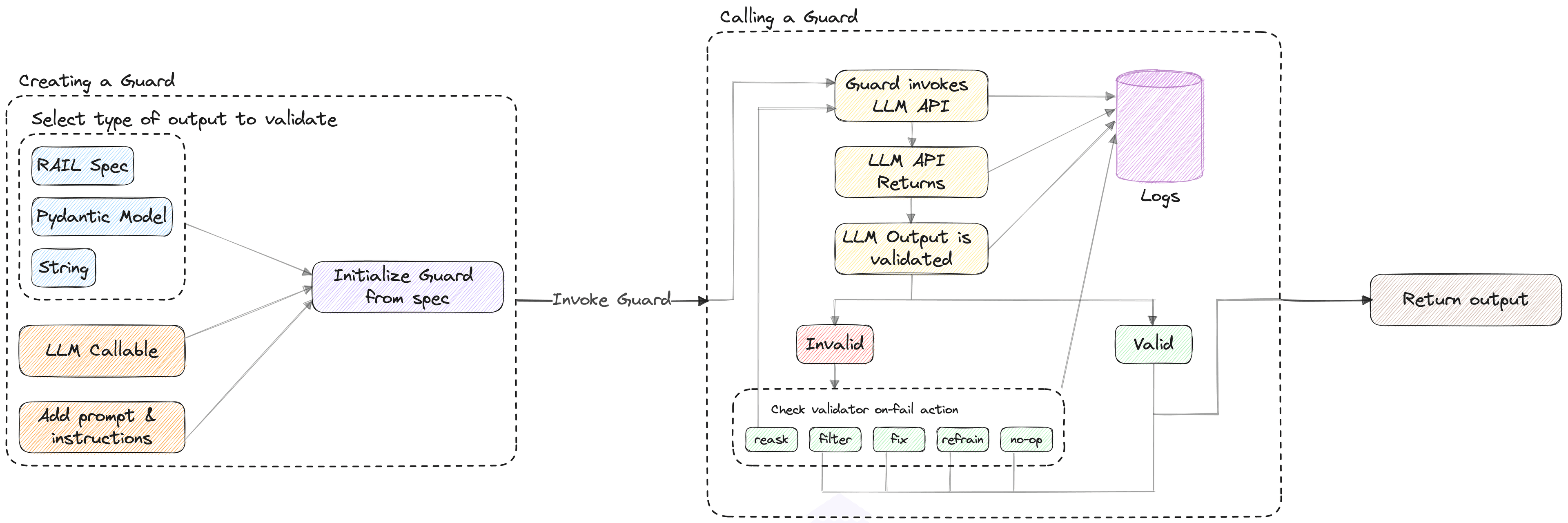

Guardrails AI lets you define and enforce assurances for AI applications, from structuring output to quality controls. It does this by wrapping the LLM application in a firewall-like bounding box (a Guard) that contains a set of validators. A Guard can include validators from our library as well as custom validators that enforce whatever your application is intended to do.
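To make the "Guard as a bounding box of validators" idea concrete, here is a minimal, library-free sketch. It is not how Guardrails is implemented: MiniGuard, not_empty, and banned_words are hypothetical names, and each validator here simply returns an error message on failure or None on success.

```python
from typing import Callable, List, Optional

# Hypothetical validator shape: takes the LLM output, returns an error message or None.
Validator = Callable[[str], Optional[str]]


class MiniGuard:
    """Hypothetical stand-in for a Guard: a container that runs a set of validators."""

    def __init__(self, validators: List[Validator]):
        self.validators = validators

    def validate(self, llm_output: str) -> List[str]:
        """Run every validator and collect all error messages (an empty list means valid)."""
        errors = []
        for validator in self.validators:
            error = validator(llm_output)
            if error is not None:
                errors.append(error)
        return errors


def not_empty(text: str) -> Optional[str]:
    return None if text.strip() else "output is empty"


def banned_words(text: str) -> Optional[str]:
    # Toy word list, for illustration only.
    banned = {"darn", "heck"}
    hits = banned & set(text.lower().split())
    return f"contains banned words: {sorted(hits)}" if hits else None


guard = MiniGuard([not_empty, banned_words])
print(guard.validate("Have a great day"))  # []
print(guard.validate("What the heck"))     # ["contains banned words: ['heck']"]
```

A real Guard does more than report errors (for example, it can re-ask the LLM on failure), but the core shape is the same: one boundary, many independent checks.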

Native Pydantic support

In addition to creating a Guard from a RAIL spec, we now support Pydantic as a first-class citizen in Guardrails. This means you can define a Guard from your Pydantic models and embed Guardrails directly in your LLM application.

For example, you can create a Guard as follows:

```python
from pydantic import BaseModel, Field

from guardrails import Guard
from guardrails.validators import TwoWords


class Director(BaseModel):
    """Name and birth year of a movie director."""

    # `validators` attaches Guardrails validators to this field.
    name: str = Field(..., description="First and last name", validators=TwoWords())
    birth_year: int


class Movie(BaseModel):
    """Metadata about a movie"""

    name: str = Field(..., description="Name of movie")
    director: Director
    release_year: int


# Build a Guard whose output schema is the Movie model.
guard = Guard.from_pydantic(Movie)
```

For more details on using Guardrails with Pydantic, see the Pydantic documentation page.
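Conceptually, the Movie Guard checks that the LLM returns JSON matching the nested Movie/Director schema and that the director's name passes the two-words rule. The stdlib-only sketch below approximates that check with dataclasses so it runs without any dependencies. It is a hypothetical illustration, not how Guardrails validates Pydantic models internally, and it assumes "two words" means exactly two whitespace-separated tokens.

```python
import json
from dataclasses import dataclass


@dataclass
class Director:
    name: str
    birth_year: int


@dataclass
class Movie:
    name: str
    director: Director
    release_year: int


def two_words(text: str) -> bool:
    # Assumption: "two words" means exactly two whitespace-separated tokens.
    return len(text.split()) == 2


def parse_movie(raw: str) -> Movie:
    """Parse an LLM's JSON reply into a Movie.

    Raises KeyError for missing fields and ValueError for invalid ones.
    """
    data = json.loads(raw)
    director = data["director"]
    if not isinstance(director["birth_year"], int) or not isinstance(data["release_year"], int):
        raise ValueError("years must be integers")
    if not two_words(director["name"]):
        raise ValueError(f"director name must be two words, got {director['name']!r}")
    return Movie(
        name=data["name"],
        director=Director(name=director["name"], birth_year=director["birth_year"]),
        release_year=data["release_year"],
    )


reply = ('{"name": "Jaws", "director": {"name": "Steven Spielberg", "birth_year": 1946}, '
         '"release_year": 1975}')
print(parse_movie(reply).director.name)  # Steven Spielberg
```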

Guards for string outputs

In the spirit of our ongoing usability improvements for working with Guardrails directly in your code, we've added a low-friction way to guard simple string outputs. This is an alternative to defining Guardrails via the RAIL XML spec or a Pydantic model.

For more details, see the documentation page on defining quick Guardrails for string outputs.

Creating a Guard for strings via XML

```xml
<rail version="0.1">
<output
    type="string"
    format="two-words"
    on-fail-two-words="reask"
/>
</rail>
```

Creating a Guard for strings via Code

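```python
from guardrails import Guard
from guardrails.validators import ValidLength

# Guard a plain string output (a puppy name) that must be 10 to 20 characters long.
guard = Guard.from_string(
    validators=[ValidLength(10, 20)],
    description="Puppy name",
)
```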
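In the XML spec, on-fail-two-words="reask" tells Guardrails to re-prompt the LLM when the two-words check fails. The sketch below imitates that flow for a plain string output, combining a two-words rule with a 10 to 20 character limit in the spirit of ValidLength(10, 20). Everything here (two_words, valid_length, guard_string, fake_llm) is hypothetical and stdlib-only; the reask prompt Guardrails actually builds will differ, and we assume the length bounds are inclusive.

```python
from typing import Callable, List, Optional

# Hypothetical validator shape: returns an error message, or None if the text passes.
Validator = Callable[[str], Optional[str]]


def two_words(text: str) -> Optional[str]:
    # Assumption: "two words" = exactly two whitespace-separated tokens.
    return None if len(text.split()) == 2 else "must be exactly two words"


def valid_length(min_len: int, max_len: int) -> Validator:
    # Assumption: both bounds are inclusive.
    def check(text: str) -> Optional[str]:
        if min_len <= len(text) <= max_len:
            return None
        return f"length must be between {min_len} and {max_len}"
    return check


def guard_string(llm: Callable[[str], str], prompt: str,
                 validators: List[Validator], max_reasks: int = 2) -> Optional[str]:
    """Call the LLM, validate its output, and re-ask with the errors until it passes."""
    current_prompt = prompt
    for _ in range(max_reasks + 1):
        output = llm(current_prompt)
        results = [v(output) for v in validators]
        errors = [e for e in results if e is not None]
        if not errors:
            return output
        # Reask: tell the LLM what was wrong and try again.
        current_prompt = f"{prompt}\nYour last answer {output!r} failed: {'; '.join(errors)}"
    return None  # gave up after max_reasks re-asks


canned = iter(["Rex", "Sir Barkington"])


def fake_llm(prompt: str) -> str:
    # Stand-in for a real LLM call: returns canned answers in order.
    return next(canned)


result = guard_string(fake_llm, "Name a puppy.", [two_words, valid_length(10, 20)])
print(result)  # Sir Barkington
```

The first answer, "Rex", fails both checks, so the sketch re-asks once and accepts "Sir Barkington" (two words, 14 characters).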

Provenance guardrails

As part of the 0.2.0 release, we have published a ProvenanceV1 guardrail. ProvenanceV1 lets you use a second LLM to validate an LLM's output against a provided set of contexts. For more details, see the ProvenanceV1 documentation page.
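As a rough illustration of the idea (not ProvenanceV1's actual algorithm or API), the sketch below splits an output into sentences and asks a judge whether each sentence is supported by the contexts. In ProvenanceV1 the judging is done by another LLM; here overlap_judge is a hypothetical word-overlap stand-in so the example runs offline, and checking sentence by sentence is a choice made for this sketch.

```python
import re
from typing import Callable, List

# judge(sentence, contexts) -> True if the contexts support the sentence.
# In ProvenanceV1 an LLM plays this role; below is a toy heuristic instead.
Judge = Callable[[str, List[str]], bool]


def split_sentences(text: str) -> List[str]:
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def unsupported_sentences(output: str, contexts: List[str], judge: Judge) -> List[str]:
    """Return the sentences of `output` that the judge says the contexts do not support."""
    return [s for s in split_sentences(output) if not judge(s, contexts)]


def overlap_judge(sentence: str, contexts: List[str]) -> bool:
    # Hypothetical heuristic: supported if >= 80% of the sentence's words appear in one context.
    words = set(re.findall(r"\w+", sentence.lower()))
    return any(
        len(words & set(re.findall(r"\w+", c.lower()))) >= 0.8 * len(words)
        for c in contexts
    )


contexts = ["The Eiffel Tower is in Paris.", "It was completed in 1889."]
output = "The Eiffel Tower is in Paris. It is made of solid gold."
print(unsupported_sentences(output, contexts, overlap_judge))  # ['It is made of solid gold.']
```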

Breaking changes

- Refactoring string templates: We now use Template.safe_substitute to avoid unnecessary escapes.

- Refactoring variable references: As part of the string templating change, variables must now be referenced as ${my_var} instead of {{my_var}}. For namespacing, primitives should now be referenced as ${gr.complete_json_suffix_v2} instead of @complete_json_suffix_v2.
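Template.safe_substitute is presumably Python's standard string.Template. The sketch below shows why it helps: ${var} placeholders are filled in, literal JSON braces in a prompt survive without escaping (str.format would require doubling them), and unknown placeholders are left untouched instead of raising. The default placeholder pattern does not allow dots, so DottedTemplate is a hypothetical subclass showing one way to resolve namespaced names like ${gr.complete_json_suffix_v2}; Guardrails' own handling of the gr. namespace may differ.

```python
from string import Template

# ${movie} is substituted; the JSON braces are plain text to Template and need no escaping.
prompt = Template('Describe ${movie} as JSON like {"name": "...", "release_year": 1975}.')
print(prompt.safe_substitute(movie="Jaws"))
# Describe Jaws as JSON like {"name": "...", "release_year": 1975}.

# safe_substitute leaves unknown placeholders as-is instead of raising KeyError.
print(Template("Hi ${user}, see ${missing}").safe_substitute(user="Ann"))
# Hi Ann, see ${missing}


class DottedTemplate(Template):
    # Hypothetical subclass: allow dots in placeholder names so that namespaced
    # references such as ${gr.complete_json_suffix_v2} can be resolved.
    idpattern = r"(?a:[_a-z][_a-z0-9.]*)"


suffix = DottedTemplate("Answer:\n${gr.complete_json_suffix_v2}")
print(suffix.safe_substitute({"gr.complete_json_suffix_v2": "Return valid JSON only."}))
# Answer:
# Return valid JSON only.
```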

Other changes

- Refactoring validators: We completely refactored Guardrails validators so that it's much easier to create custom validators or pass custom runtime information to them. More information on custom validators, along with examples of passing custom metadata, is available in the documentation.

- Refactoring choice tags: The previous syntax for specifying choices was verbose. We've refactored it into a simpler, OpenAPI-consistent structure.

- Shiny new docs! We've restructured the documentation and added more Pydantic examples, among other improvements.
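One benefit of the validator refactor is passing runtime information into a validator. The stdlib-only sketch below shows that pattern: the validator reads its configuration from a metadata dict supplied at validation time rather than from its constructor. BannedWords, Result, and the banned_words key are hypothetical; for the real base class, registration, and result types, see the custom validator documentation.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class Result:
    """Hypothetical validation result: pass/fail plus an optional error message."""
    passed: bool
    error: Optional[str] = None


class BannedWords:
    """Hypothetical custom validator whose banned list arrives via runtime metadata."""

    def validate(self, value: str, metadata: Dict[str, Any]) -> Result:
        banned = {w.lower() for w in metadata.get("banned_words", [])}
        hits = sorted(banned & set(value.lower().split()))
        if hits:
            return Result(False, f"banned words found: {', '.join(hits)}")
        return Result(True)


validator = BannedWords()
print(validator.validate("Our rival product is great", {"banned_words": ["Rival"]}))
# Result(passed=False, error='banned words found: rival')
print(validator.validate("Our product is great", {"banned_words": ["rival"]}))
# Result(passed=True, error=None)
```

Because the banned list is supplied per call, the same validator instance can enforce different rules for different requests.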

Migration guide

For details on migrating to 0.2.0, please see our migration guide.

Take it for a spin!

You can install the latest version of Guardrails with:

```bash
pip install guardrails-ai
```

There are a number of ways to engage with us:

- Join the discord: https://discord.gg/kVZEnR4WQK

- Star us on GitHub: https://github.com/guardrails-ai/guardrails

- Follow us on Twitter: https://twitter.com/guardrails_ai

- Follow us on LinkedIn: https://www.linkedin.com/company/guardrailsai/

We're always looking for contributions from the open source community. Check out guardrails/issues for a list of good starter issues.
